Site to scrape: http://beijing.8684.cn/

(1) Environment setup. Here is the code:

    # -*- coding: utf-8 -*-
    import requests  # import requests
    from bs4 import BeautifulSoup  # import BeautifulSoup from bs4
    import os

    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0'}
    all_url = 'http://beijing.8684.cn'  # starting URL
    start_html = requests.get(all_url, headers=headers)
    # print(start_html.text)
    Soup = BeautifulSoup(start_html.text, 'lxml')  # parse the HTML document with lxml

(2) Analyzing the site

1. The site classifies Beijing bus routes in three ways:

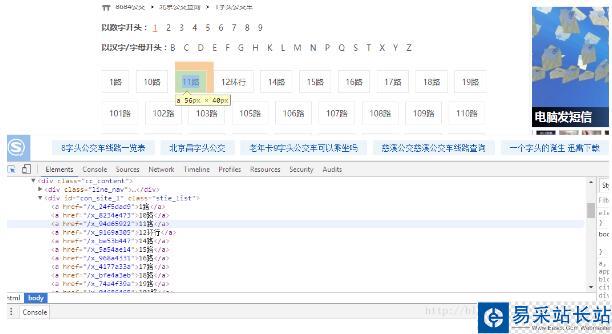

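This article crawls the routes grouped by leading digit. Press "F12" to open the developer tools, click "Elements", then click "1". The links turn out to be stored inside <div class="bus_kt_r1">, so we only need to extract the href values from that div: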

Code:

    all_a = Soup.find('div', class_='bus_kt_r1').find_all('a')
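The `<a>` tags collected here carry site-relative `href` values, which have to be joined with the base URL before they can be requested. The following is a minimal sketch of that joining step using the standard library's `urllib.parse.urljoin`. The sample paths and the helper name `to_absolute` are assumptions for illustration only and are not taken from the live site.

```python
from urllib.parse import urljoin

BASE_URL = 'http://beijing.8684.cn'  # same start URL as all_url in this article


def to_absolute(hrefs, base=BASE_URL):
    """Join each (possibly relative) href with the base URL."""
    return [urljoin(base + '/', href) for href in hrefs]


# Hypothetical href values, standing in for a['href'] of each tag in all_a.
sample_hrefs = ['/list1', '/list2', 'http://beijing.8684.cn/list3']
absolute_urls = to_absolute(sample_hrefs)
for url in absolute_urls:
    print(url)
```

Compared with plain string concatenation, `urljoin` also leaves an already absolute link unchanged, which may help if the site ever mixes both kinds of href.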

2. Moving on, the link for each route sits in an <a> inside <div id="con_site_1" class="site_list">. Its href is the route's URL and its text is the route name. Code:

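    for a in all_a:  # a is each <a> tag collected in all_a
        href = a['href']  # take the href attribute of the <a> tag
        html = all_url + href
        second_html = requests.get(html, headers=headers)
        # print(second_html.text)
        Soup2 = BeautifulSoup(second_html.text, 'lxml')
        # The div that has both an id and a class could not be selected directly
        # (reason unknown), so it is reached indirectly: the last div inside cc_content.
        all_a2 = Soup2.find('div', class_='cc_content').find_all('div')[-1].find_all('a')

3. Open a route link to see its stop details. Inspecting the page structure shows that the route's basic information is stored in <div class="bus_i_content">, while the stop information is stored in <div class="bus_line_top"> and <div class="bus_line_site">. Extraction code: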

    for a2 in all_a2:  # a2 is each <a> tag collected in all_a2
        title1 = a2.get_text()  # text of the a2 tag (the route name)
        href1 = a2['href']  # href attribute of the a2 tag
        # print(title1, href1)
        html_bus = all_url + href1  # build the URL of the route page
        third_html = requests.get(html_bus, headers=headers)
        Soup3 = BeautifulSoup(third_html.text, 'lxml')
        bus_name = Soup3.find('div', class_='bus_i_t1').find('h1').get_text()  # route name
        bus_type = Soup3.find('div', class_='bus_i_t1').find('a').get_text()  # route type
        bus_time = Soup3.find_all('p', class_='bus_i_t4')[0].get_text()  # operating hours
        bus_cost = Soup3.find_all('p', class_='bus_i_t4')[1].get_text()  # fare
        bus_company = Soup3.find_all('p', class_='bus_i_t4')[2].find('a').get_text()  # bus company
        bus_update = Soup3.find_all('p', class_='bus_i_t4')[3].get_text()  # last updated
        bus_label = Soup3.find('div', class_='bus_label')
        if bus_label:
            bus_length = bus_label.get_text()  # route length
        else:
            bus_length = []
        # print(bus_name, bus_type, bus_time, bus_cost, bus_company, bus_update)
        all_line = Soup3.find_all('div', class_='bus_line_top')  # route summary
        all_site = Soup3.find_all('div', class_='bus_line_site')  # bus stops
        line_x = all_line[0].find('div', class_='bus_line_txt').get_text()[:-9] + all_line[0].find_all('span')[-1].get_text()
        sites_x = all_site[0].find_all('a')
        sites_x_list = []  # stops in the up direction
        for site_x in sites_x:
            sites_x_list.append(site_x.get_text())
        line_num = len(all_line)
        # For a loop line, two lists are still returned, but one of them is empty.
        if line_num == 2:
            line_y = all_line[1].find('div', class_='bus_line_txt').get_text()[:-9] + all_line[1].find_all('span')[-1].get_text()
            sites_y = all_site[1].find_all('a')
            sites_y_list = []  # stops in the down direction
            for site_y in sites_y:
                sites_y_list.append(site_y.get_text())
        else:
            line_y, sites_y_list = [], []
        information = [bus_name, bus_type, bus_time, bus_cost, bus_company, bus_update, bus_length, line_x, sites_x_list, line_y, sites_y_list]
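The `information` list above is positional, so whoever reads it has to remember which index holds which field. As a small sketch, the values could be paired with names that mirror the variable names used in the extraction code. The helper `to_record`, the constant `FIELD_NAMES`, and all sample values below are assumptions for illustration; none of the values are scraped data.

```python
# Field names mirror the variables that make up the information list.
FIELD_NAMES = [
    'bus_name', 'bus_type', 'bus_time', 'bus_cost', 'bus_company',
    'bus_update', 'bus_length', 'line_x', 'sites_x_list',
    'line_y', 'sites_y_list',
]


def to_record(information):
    """Pair each positional value with its field name."""
    if len(information) != len(FIELD_NAMES):
        raise ValueError('expected %d fields, got %d'
                         % (len(FIELD_NAMES), len(information)))
    return dict(zip(FIELD_NAMES, information))


# Hypothetical values, for illustration only (not scraped data).
sample_information = [
    'Route A', 'type', 'time', 'cost', 'company', 'updated', [],
    'Stop 1 to Stop 3', ['Stop 1', 'Stop 2', 'Stop 3'],
    [], [],  # single-direction case: line_y and sites_y_list stay empty
]
record = to_record(sample_information)
print(record['bus_name'], len(record['sites_x_list']))
```

The length check is there so that a page with an unexpected layout fails loudly instead of silently shifting values into the wrong fields.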
