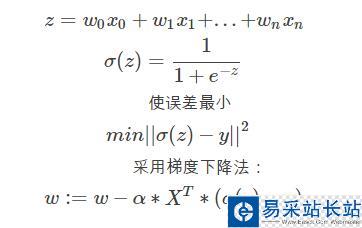

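Fitting a straight line to data is called regression. The idea behind logistic regression classification is to build a regression formula for the classification boundary from the existing data.

The formula is: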
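The formula itself did not survive in this copy of the article. Judging from the `Sigmoid` function in the code listings, it is presumably the standard logistic model, sketched here under that assumption (the symbols $w$, $x$ and $h_w$ are notation chosen for this sketch, not taken from the original):

$$
h_w(x) = \sigma(w^T x) = \frac{1}{1 + e^{-w^T x}}, \qquad x = (1, x_1, x_2)^T
$$

A point is assigned to class 1 when $h_w(x) \ge 0.5$, which is the same as $w^T x \ge 0$. The classification boundary is therefore the line $w_0 + w_1 x_1 + w_2 x_2 = 0$.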

I. Gradient Ascent

Every iteration uses the entire data set to compute the update.

    for number of iterations:
        train
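As a sketch of what the training step in the loop above computes, assuming the usual log-likelihood objective for logistic regression (the symbols $X$ for the data matrix, $y$ for the label vector and $\alpha$ for the step size are notation introduced here):

$$
\ell(w) = \sum_{i=1}^{m} \Big[ y_i \log \sigma(w^T x_i) + (1 - y_i) \log\big(1 - \sigma(w^T x_i)\big) \Big], \qquad \sigma(z) = \frac{1}{1 + e^{-z}}
$$

$$
\nabla_w \ell(w) = X^T \big( y - \sigma(Xw) \big)
$$

$$
w \leftarrow w + \alpha \, X^T \big( y - \sigma(Xw) \big)
$$

The code expresses the same step as subtracting `alpha` times $X^T(\text{predict} - \text{label})$, which is identical once the sign inside the parentheses is flipped.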

The full code is as follows:

    import numpy as np
    import matplotlib.pyplot as plt


    def loadData():
        """Read testSet.txt: each line holds two features and a label."""
        labelVec = []
        dataMat = []
        with open('testSet.txt') as f:
            for line in f:
                fields = line.strip().split()
                # Prepend 1.0 so that w[0] acts as the intercept term.
                dataMat.append([1.0, fields[0], fields[1]])
                labelVec.append(fields[2])
        return dataMat, labelVec


    def Sigmoid(inX):
        return 1 / (1 + np.exp(-inX))


    def trainLR(dataMat, labelVec):
        dataMatrix = np.asarray(dataMat, dtype=np.float64)                   # shape (m, n)
        labelMatrix = np.asarray(labelVec, dtype=np.float64).reshape(-1, 1)  # shape (m, 1)
        m, n = dataMatrix.shape
        w = np.ones((n, 1))
        alpha = 0.001
        for i in range(500):
            # Every sample takes part in each update.
            predict = Sigmoid(dataMatrix @ w)
            error = predict - labelMatrix
            # Subtracting X^T (predict - label) ascends the log-likelihood gradient.
            w = w - alpha * dataMatrix.T @ error
        return w


    def plotBestFit(wei, data, label):
        # Accept an ndarray or a matrix and flatten it to a 1-D weight vector.
        weights = np.asarray(wei).ravel()
        fig = plt.figure(0)
        ax = fig.add_subplot(111)
        # Decision boundary: w0 + w1*x1 + w2*x2 = 0, solved for x2.
        xxx = np.arange(-3, 3, 0.1)
        yyy = -weights[0] / weights[2] - weights[1] / weights[2] * xxx
        ax.plot(xxx, yyy)
        cord1 = []
        cord0 = []
        for i in range(len(label)):
            if label[i] == 1:
                cord1.append(data[i][1:3])
            else:
                cord0.append(data[i][1:3])
        cord1 = np.array(cord1)
        cord0 = np.array(cord0)
        ax.scatter(cord1[:, 0], cord1[:, 1], c='red')
        ax.scatter(cord0[:, 0], cord0[:, 1], c='green')
        plt.show()


    if __name__ == "__main__":
        data, label = loadData()
        data = np.array(data).astype(np.float64)
        label = [int(item) for item in label]
        weight = trainLR(data, label)
        plotBestFit(weight, data, label)

II. Stochastic Gradient Ascent

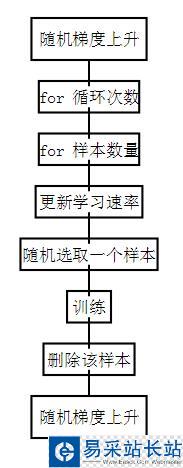

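1. The learning rate is adjusted as the iterations proceed, which dampens high-frequency oscillation of the parameters.

2. The sample used for each update of the regression parameters is picked at random, which reduces periodic fluctuations.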
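To make the first point concrete, here is a minimal sketch of the decaying step size, using the schedule `4/(i+j+1) + 0.01` that appears in the article's listing. The function name `learning_rate` and the parameter names `base` and `floor` are hypothetical, introduced only for this illustration.

```python
def learning_rate(i, j, base=4.0, floor=0.01):
    """Step size for sample i of pass j: base/(i+j+1) + floor."""
    return base / (i + j + 1) + floor


# The rate is large at the start, shrinks as either counter grows,
# and can never drop below the constant floor term.
first = learning_rate(0, 0)      # very first update
late = learning_rate(99, 299)    # 100th sample of the 300th pass
print(first, late)
```

The constant term keeps later samples from being ignored entirely: however long training runs, each update still moves the weights by at least `floor` times the gradient.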

    for number of iterations:
        for number of samples:
            update the learning rate
            pick a random sample
            train
            remove that sample from the sample set
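The "pick a random sample, then remove it" steps amount to visiting every sample exactly once per pass in random order. A minimal sketch of just that part, assuming NumPy's `Generator` API (the helper name `epoch_order` is hypothetical and is not part of the article's code):

```python
import numpy as np


def epoch_order(m, rng):
    """Return the indices 0..m-1 in random order, each exactly once."""
    remaining = list(range(m))      # samples not yet used in this pass
    order = []
    while remaining:
        pick = int(rng.integers(len(remaining)))   # random position in the pool
        order.append(remaining.pop(pick))          # use it, then drop it from the pool
    return order


rng = np.random.default_rng(0)
order = epoch_order(5, rng)
print(order)
```

The removal has to act on a mutable container. `np.delete` returns a new array and leaves its argument untouched, so calling it without using the result removes nothing. An equivalent shortcut is `rng.permutation(m)`, which yields such an ordering in one call.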

The full code is as follows:

    import numpy as np
    import matplotlib.pyplot as plt


    def loadData():
        """Read testSet.txt: each line holds two features and a label."""
        labelVec = []
        dataMat = []
        with open('testSet.txt') as f:
            for line in f:
                fields = line.strip().split()
                # Prepend 1.0 so that w[0] acts as the intercept term.
                dataMat.append([1.0, fields[0], fields[1]])
                labelVec.append(fields[2])
        return dataMat, labelVec


    def Sigmoid(inX):
        return 1 / (1 + np.exp(-inX))


    def plotBestFit(wei, data, label):
        # Accept an ndarray or a matrix and flatten it to a 1-D weight vector.
        weights = np.asarray(wei).ravel()
        fig = plt.figure(0)
        ax = fig.add_subplot(111)
        # Decision boundary: w0 + w1*x1 + w2*x2 = 0, solved for x2.
        xxx = np.arange(-3, 3, 0.1)
        yyy = -weights[0] / weights[2] - weights[1] / weights[2] * xxx
        ax.plot(xxx, yyy)
        cord1 = []
        cord0 = []
        for i in range(len(label)):
            if label[i] == 1:
                cord1.append(data[i][1:3])
            else:
                cord0.append(data[i][1:3])
        cord1 = np.array(cord1)
        cord0 = np.array(cord0)
        ax.scatter(cord1[:, 0], cord1[:, 1], c='red')
        ax.scatter(cord0[:, 0], cord0[:, 1], c='green')
        plt.show()


    def stocGradAscent(dataMat, labelVec, trainLoop):
        m, n = np.shape(dataMat)
        w = np.ones((n, 1))
        for j in range(trainLoop):
            dataIndex = list(range(m))  # samples not yet used in this pass
            for i in range(m):
                # The step size shrinks over time but never falls below 0.01.
                alpha = 4 / (i + j + 1) + 0.01
                randIndex = int(np.random.uniform(0, len(dataIndex)))
                sample = dataIndex[randIndex]
                predict = Sigmoid(np.dot(dataMat[sample], w))
                error = predict - labelVec[sample]
                w = w - alpha * error * dataMat[sample].reshape(n, 1)
                # Remove the sample so it is not drawn again in this pass.
                del dataIndex[randIndex]
        return w


    if __name__ == "__main__":
        data, label = loadData()
        data = np.array(data).astype(np.float64)
        label = [int(item) for item in label]
        weight = stocGradAscent(data, label, 300)
        plotBestFit(weight, data, label)
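Once weights have been trained, classifying a new point only requires evaluating the sigmoid and comparing it with a threshold. The sketch below is an illustration under assumptions: the function `classify`, its `threshold` parameter and the weights in `w_example` are all made up for this example and are not results from the article's data.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def classify(x1, x2, w, threshold=0.5):
    """Return 1 if the predicted probability reaches the threshold, else 0."""
    w = np.asarray(w, dtype=np.float64).ravel()   # accept shape (3,) or (3, 1)
    features = np.array([1.0, x1, x2])            # leading 1.0 matches the intercept weight
    return int(sigmoid(features @ w) >= threshold)


# Hypothetical weights whose boundary is the line x2 = x1.
w_example = np.array([[0.0], [1.0], [-1.0]])
print(classify(2.0, 0.0, w_example))   # below the line, prints 1
print(classify(0.0, 2.0, w_example))   # above the line, prints 0
```

With a threshold of 0.5 the decision reduces to the sign of `features @ w`, so the points on either side of the plotted line receive different labels.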
