前言

這幾天caffe2發布了,支持移動端,我理解是類似單片機的物聯網吧應該不是手機之類的,試想iphone7跑CNN,畫面太美~

作為一個剛入坑的,甚至還沒入坑的人,咱們還是老實研究下tensorflow吧,雖然它沒有caffe好上手。tensorflow的特點我就不介紹了:

基于Python,寫的很快并且具有可讀性。 支持CPU和GPU,在多GPU系統上的運行更為順暢。 代碼編譯效率較高。 社區發展的非常迅速并且活躍。 能夠生成顯示網絡拓撲結構和性能的可視化圖。tensorflow(tf)運算流程:

tensorflow的運行流程主要有2步,分別是構造模型和訓練。

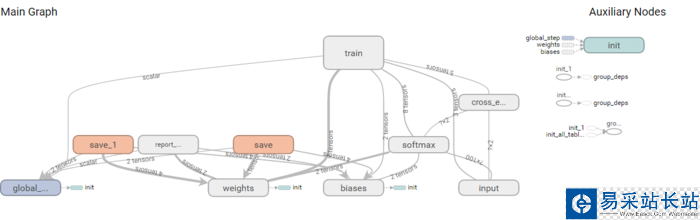

在構造模型階段,我們需要構建一個圖(Graph)來描述我們的模型,tensoflow的強大之處也在這了,支持tensorboard:

就類似這樣的圖,有點像流程圖,這里還推薦一個google的tensoflow游樂場,很有意思。

然后到了訓練階段,在構造模型階段是不進行計算的,只有在tensoflow.Session.run()時會開始計算。

文本分類

先給出代碼,然后我們在一一做解釋

# -*- coding: utf-8 -*-import pandas as pdimport numpy as npimport tensorflow as tffrom collections import Counterfrom sklearn.datasets import fetch_20newsgroupsdef get_word_2_index(vocab): word2index = {} for i,word in enumerate(vocab): word2index[word] = i return word2indexdef get_batch(df,i,batch_size): batches = [] results = [] texts = df.data[i*batch_size : i*batch_size+batch_size] categories = df.target[i*batch_size : i*batch_size+batch_size] for text in texts: layer = np.zeros(total_words,dtype=float) for word in text.split(' '): layer[word2index[word.lower()]] += 1 batches.append(layer) for category in categories: y = np.zeros((3),dtype=float) if category == 0: y[0] = 1. elif category == 1: y[1] = 1. else: y[2] = 1. results.append(y) return np.array(batches),np.array(results)def multilayer_perceptron(input_tensor, weights, biases): #hidden層RELU函數激勵 layer_1_multiplication = tf.matmul(input_tensor, weights['h1']) layer_1_addition = tf.add(layer_1_multiplication, biases['b1']) layer_1 = tf.nn.relu(layer_1_addition) layer_2_multiplication = tf.matmul(layer_1, weights['h2']) layer_2_addition = tf.add(layer_2_multiplication, biases['b2']) layer_2 = tf.nn.relu(layer_2_addition) # Output layer out_layer_multiplication = tf.matmul(layer_2, weights['out']) out_layer_addition = out_layer_multiplication + biases['out'] return out_layer_addition#main#從sklearn.datas獲取數據cate = ["comp.graphics","sci.space","rec.sport.baseball"]newsgroups_train = fetch_20newsgroups(subset='train', categories=cate)newsgroups_test = fetch_20newsgroups(subset='test', categories=cate)# 計算訓練和測試數據總數vocab = Counter()for text in newsgroups_train.data: for word in text.split(' '): vocab[word.lower()]+=1 for text in newsgroups_test.data: for word in text.split(' '): vocab[word.lower()]+=1total_words = len(vocab)word2index = get_word_2_index(vocab)n_hidden_1 = 100 # 一層hidden層神經元個數n_hidden_2 = 100 # 二層hidden層神經元個數n_input = total_words n_classes = 3 # graphics, sci.space and baseball 3層輸出層即將文本分為三類#占位input_tensor = tf.placeholder(tf.float32,[None, n_input],name="input")output_tensor = tf.placeholder(tf.float32,[None, n_classes],name="output") #正態分布存儲權值和偏差值weights = { 'h1': tf.Variable(tf.random_normal([n_input, n_hidden_1])), 'h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])), 'out': tf.Variable(tf.random_normal([n_hidden_2, n_classes]))}biases = { 'b1': tf.Variable(tf.random_normal([n_hidden_1])), 'b2': tf.Variable(tf.random_normal([n_hidden_2])), 'out': tf.Variable(tf.random_normal([n_classes]))}#初始化prediction = multilayer_perceptron(input_tensor, weights, biases)# 定義 loss and optimizer 采用softmax函數# reduce_mean計算平均誤差loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=prediction, labels=output_tensor))optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(loss)#初始化所有變量init = tf.global_variables_initializer()#部署 graphwith tf.Session() as sess: sess.run(init) training_epochs = 100 display_step = 5 batch_size = 1000 # Training for epoch in range(training_epochs): avg_cost = 0. total_batch = int(len(newsgroups_train.data) / batch_size) for i in range(total_batch): batch_x,batch_y = get_batch(newsgroups_train,i,batch_size) c,_ = sess.run([loss,optimizer], feed_dict={input_tensor: batch_x,output_tensor:batch_y}) # 計算平均損失 avg_cost += c / total_batch # 每5次epoch展示一次loss if epoch % display_step == 0: print("Epoch:", '%d' % (epoch+1), "loss=", "{:.6f}".format(avg_cost)) print("Finished!") # Test model correct_prediction = tf.equal(tf.argmax(prediction, 1), tf.argmax(output_tensor, 1)) # 計算準確率 accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float")) total_test_data = len(newsgroups_test.target) batch_x_test,batch_y_test = get_batch(newsgroups_test,0,total_test_data) print("Accuracy:", accuracy.eval({input_tensor: batch_x_test, output_tensor: batch_y_test}))

新聞熱點

疑難解答